We are excited to release a-Gnostics 2.0, the service for rapid development of predictive analytics models, and would like to share details about the platform architecture and the new features available in this release.

Background

a-Gnostics, a SoftElegance company, provides an Industrial AI service focused on anomaly detection and equipment failure prediction. The service is tailored to multivariable processes and time-series data retrieved from industrial equipment, and it automatically and accurately identifies normal, pre-failure, and failure states. The main objective is to apply machine learning and artificial intelligence to predict failures before they occur.

The a-Gnostics platform offers customers a variety of services known as A-SETS.

The A-SETS all fall into the following high-level categories:

The list of services keeps growing as new industrial AI and machine learning use cases are implemented. For example, a recently added a-Gnostics service predicts electrical motor failures to help manufacturers reduce repair and maintenance costs.

Introduction

One of the main challenges of industrial AI/ML solutions is the need to process a variety of data from different sources. A flexible infrastructure that scales to host the data and execute hundreds or thousands of models is also essential. High security, compliance, and data and model governance requirements add further complexity. The a-Gnostics platform addresses all these challenges with a wide range of out-of-the-box features and customization capabilities that allow the services to be tailored to each client’s needs.

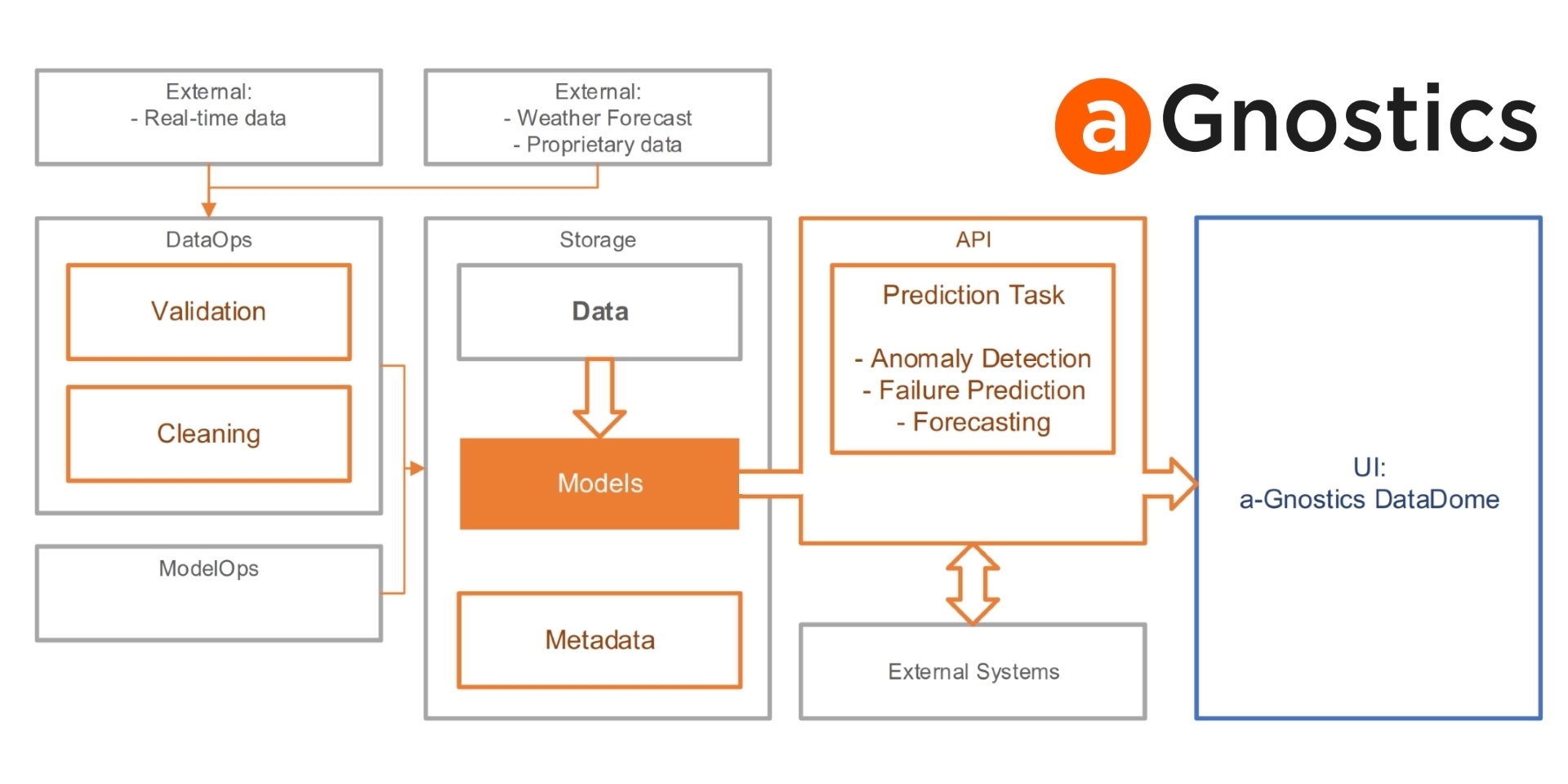

The diagram above shows the high-level architecture of the a-Gnostics platform (the most challenging tasks are shown in orange on the diagram).

The system is composed of the following functional components:

One of the key principles and advantages of the a-Gnostics platform is that its infrastructure is independent of where the services are deployed and launched. a-Gnostics supports multiple deployment and consumption models:

The video shows an overview of the tasks that need to be solved to develop an automated data pipeline for analyzing industrial data using machine learning methods.

Modern enterprises operate heterogeneous and complex digital environments. To obtain a high-quality training dataset, it is important to have a set of standard equipment connectors to collect raw data, processing modules to validate and clean the data, and storage that can handle high volumes and a wide variety of data types and formats.

As companies gradually move their infrastructure to the cloud, gathering data from industrial equipment can be streamlined with cloud services such as AWS IoT Greengrass, AWS IoT SiteWise, Azure IoT Edge, and others. This also reduces integration costs and allows greater focus on building AI/ML models and services.

The main development language for the platform is Python. The system is modular and follows a microservices architecture, with each service packaged as a Docker container.

Data

The data processed by the a-Gnostics platform falls into the following categories:

Regardless of category, most of the data that a-Gnostics processes is time-series data.

High-quality, complete datasets are an essential prerequisite for building accurate models, especially in industrial AI. It is also very important to provide comprehensive data visualization so that end users can easily interpret the data and make decisions quickly.
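Completeness is one property that can be checked automatically before a dataset is used for training. The sketch below is a minimal example, assuming regularly sampled readings with a known sampling interval; the function names are hypothetical and are not part of the a-Gnostics API.

```python
from datetime import datetime, timedelta


def find_gaps(timestamps, step):
    """Return (before, after) pairs where consecutive samples are more than `step` apart.

    Assumes `timestamps` is sorted and `step` is the expected sampling interval.
    """
    return [(prev, curr) for prev, curr in zip(timestamps, timestamps[1:]) if curr - prev > step]


def completeness(timestamps, start, end, step):
    """Share of the expected samples in [start, end] that are actually present."""
    expected = int((end - start) / step) + 1
    present = sum(1 for t in set(timestamps) if start <= t <= end)
    return present / expected


if __name__ == "__main__":
    t0 = datetime(2021, 8, 1)
    step = timedelta(hours=1)
    # Hourly readings with the samples for hours 3 and 4 missing
    ts = [t0 + i * step for i in range(10) if i not in (3, 4)]
    print(find_gaps(ts, step))
    print(completeness(ts, ts[0], ts[-1], step))
```

For the hourly series above, one gap is reported (between hours 2 and 5) and 8 of the 10 expected samples are present, so completeness is 0.8. A threshold on such a score can decide whether a dataset is usable as-is or must first go through cleansing.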

DataOps

a-Gnostics uses multiple data processing patterns, such as ETL (Extract, Transform, Load) and ELT (Extract, Load, Transform). These processes can be automated with DataOps techniques, which automate building, deploying, and executing data pipelines so that processing scales to thousands of data processing tasks and AI models.

a-Gnostics provides a number of ready-to-use data pipelines that convert raw data into training datasets for the energy industry, as well as general-purpose pipelines, such as weather data processing, that are used across a variety of tasks.

Raw industrial data needs to go through verification, cleansing, and transformation stages.

Specialized reusable modules have been developed for these stages, and they can be easily integrated into data pipelines.
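The idea of small reusable modules can be illustrated with a minimal sketch in which each stage (verification, cleansing, transformation) is a plain function and a pipeline is simply their composition. The stage names, the valid range, and linear interpolation as the cleansing method are illustrative assumptions, not the platform's actual modules.

```python
from typing import Callable, List, Optional

# A reading is a float, or None when it is missing or rejected.
Readings = List[Optional[float]]
Stage = Callable[[Readings], Readings]


def verify_range(low: float, high: float) -> Stage:
    """Verification: reject readings outside a physically plausible range."""
    def stage(values: Readings) -> Readings:
        return [v if v is not None and low <= v <= high else None for v in values]
    return stage


def fill_linear(values: Readings) -> Readings:
    """Cleansing: fill interior gaps by linear interpolation.

    Gaps before the first or after the last known reading are left as None.
    """
    known = [i for i, v in enumerate(values) if v is not None]
    filled = list(values)
    for a, b in zip(known, known[1:]):
        for i in range(a + 1, b):
            filled[i] = values[a] + (i - a) / (b - a) * (values[b] - values[a])
    return filled


def scale(factor: float) -> Stage:
    """Transformation: unit conversion, e.g. W to kW with factor 0.001."""
    def stage(values: Readings) -> Readings:
        return [None if v is None else v * factor for v in values]
    return stage


def make_pipeline(*stages: Stage) -> Stage:
    """Compose reusable stages into a single pipeline applied left to right."""
    def run(values: Readings) -> Readings:
        for stage in stages:
            values = stage(values)
        return values
    return run
```

For example, `make_pipeline(verify_range(0, 10_000), fill_linear, scale(0.001))` turns the power readings `[1000, 1200, -5, 1600, None, 2000]` (in W) into roughly `[1.0, 1.2, 1.4, 1.6, 1.8, 2.0]` (in kW): the negative value is rejected, both gaps are interpolated, and the units are converted. Because every stage has the same signature, stages can be reused and reordered across pipelines.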

Together, these data pipelines, reusable transformation modules, and standard frameworks and libraries streamline implementation and let the team concentrate on unsolved problems instead of building everything from scratch.

Among standard frameworks, Apache Airflow is a good example: it is well suited to tasks that involve a complex chain of steps across multiple datasets.
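Airflow models such a chain as a directed acyclic graph (DAG) of tasks and runs each task once its upstream tasks have finished. To keep the example dependency-free, the sketch below reproduces only that scheduling idea with Python's standard `graphlib` module (Python 3.9+) rather than Airflow's own API; the task names are hypothetical.

```python
from graphlib import TopologicalSorter
from typing import Dict, List, Set

# Hypothetical chain: each task maps to the set of tasks it depends on.
DEPENDENCIES: Dict[str, Set[str]] = {
    "load_meter_data": set(),
    "load_weather": set(),
    "clean_meter_data": {"load_meter_data"},
    "join_datasets": {"clean_meter_data", "load_weather"},
    "build_training_set": {"join_datasets"},
}


def execution_batches(deps: Dict[str, Set[str]]) -> List[List[str]]:
    """Group tasks into batches; all tasks in a batch could run in parallel.

    Raises graphlib.CycleError if the dependencies contain a cycle.
    """
    sorter = TopologicalSorter(deps)
    sorter.prepare()
    batches: List[List[str]] = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())
        batches.append(ready)
        # A real scheduler would mark tasks done only after they succeed.
        sorter.done(*ready)
    return batches
```

For the chain above, the two loading tasks form the first batch, followed by cleaning, joining, and building the training set. In Airflow itself, the same chain would be declared as tasks in a DAG linked with the `>>` operator, and the scheduler would additionally handle retries, schedule intervals, and monitoring.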

In summary, the componentization, reusability, best practices, and frameworks provided by the a-Gnostics platform form a very powerful data processing capability.

The data governance framework maintains all necessary metadata about the datasets: versions, original data sources, creation time, associated tasks, etc. This provides good visibility for managing, tracking, and analyzing the processes.
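A metadata record of this kind can be sketched as an immutable data class. The field names below mirror the list above but are assumptions, as are the helper functions; the sketch only shows how a parent link makes a dataset's version history traceable.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Dict, List, Optional, Sequence, Tuple


@dataclass(frozen=True)
class DatasetMetadata:
    """Hypothetical metadata record with the kinds of fields listed above."""
    name: str
    version: int
    sources: Tuple[str, ...]
    created_at: datetime
    tasks: Tuple[str, ...] = ()
    parent_version: Optional[int] = None


def next_version(meta: DatasetMetadata, sources: Sequence[str], tasks: Sequence[str]) -> DatasetMetadata:
    """Describe a new dataset version and remember which version it was derived from."""
    return DatasetMetadata(
        name=meta.name,
        version=meta.version + 1,
        sources=tuple(sources),
        created_at=datetime.now(timezone.utc),
        tasks=tuple(tasks),
        parent_version=meta.version,
    )


def lineage(registry: Dict[Tuple[str, int], DatasetMetadata], name: str, version: int) -> List[int]:
    """Follow parent links from a version back to the first known version."""
    chain: List[int] = []
    current = registry.get((name, version))
    while current is not None:
        chain.append(current.version)
        if current.parent_version is None:
            break
        current = registry.get((name, current.parent_version))
    return chain
```

Freezing the record means a published version's metadata cannot be altered in place; any change produces a new version that points back to its parent, which is what allows processes to be tracked and analyzed later.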

Data Storage

a-Gnostics stores the following entities:

PostgreSQL is used as the data store for metadata, model results, and processed datasets. In some cases, the data is represented by files of different formats stored in the file system or in cloud services such as AWS S3, Azure Blob, Azure FileShare, etc. In other cases, it is more efficient to put real-time data into specialized time-series databases.

Models

Developing machine learning models for Industry 4.0 is a challenging task. The variety of problem types and data sources carries the risk of slipping into custom development for each model. Therefore, it is important to focus on groups of tasks that are as general as possible within their categories and have good potential for reuse in related areas. Solving real-life tasks in each domain requires deep and broad knowledge of machine learning techniques. For example, tasks such as forecasting the consumption or generation of energy commodities (electricity, natural gas, etc.) or predicting equipment behavior and breakdowns require supervised, unsupervised, and semi-supervised learning. It is also very useful to have broad knowledge of the related field, which makes it possible to add new synthetic features to the model based on classic math, physics, or chemistry concepts. This combination of approaches helps achieve better model accuracy.
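As an illustration of such a synthetic feature, assume an electric motor that reports line-to-line voltage, phase current, and power factor. The textbook formula for the active power of a balanced three-phase load, P = √3 · V_LL · I · cos φ, turns these raw signals into a more informative feature. The column names, the rated power, and the choice of formula are assumptions for this example, not features the platform is documented to use.

```python
import math
from typing import Dict, List


def three_phase_active_power_kw(v_line_v: float, current_a: float, power_factor: float) -> float:
    """Active power of a balanced three-phase load in kW: sqrt(3) * V_LL * I * cos(phi) / 1000."""
    return math.sqrt(3) * v_line_v * current_a * power_factor / 1000.0


def add_power_features(rows: List[Dict[str, float]], rated_kw: float) -> List[Dict[str, float]]:
    """Return copies of sensor rows enriched with physics-based synthetic features."""
    enriched = []
    for row in rows:
        p_kw = three_phase_active_power_kw(row["voltage_v"], row["current_a"], row["power_factor"])
        # load_ratio relates the actual power to the motor's (hypothetical) rated power
        enriched.append({**row, "active_power_kw": p_kw, "load_ratio": p_kw / rated_kw})
    return enriched
```

For a reading of 400 V, 100 A, and a power factor of 0.9, the motor draws about 62.4 kW; with a rated power of 75 kW, the load ratio is about 0.83. A feature like this can expose overload conditions that are hard for a model to infer from voltage and current separately.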

To scale models to hundreds of objects and train thousands of models, it is critical to automate all stages of model building: data preparation, model training, storage, and application to industrial operations. a-Gnostics uses ModelOps best practices for the lifecycle management of machine learning models. While we use standard frameworks such as MLflow as much as possible, we built proprietary services integrated with MLflow for tasks such as the model registry.
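The sketch below is a deliberately simplified, in-memory illustration of what a model registry keeps track of: numbered versions, their metrics, and a deployment stage. It is neither MLflow's API nor the proprietary a-Gnostics service, and all class, method, and stage names are hypothetical.

```python
from dataclasses import dataclass
from typing import Any, Dict, List, Optional


@dataclass
class ModelVersion:
    name: str
    version: int
    model: Any
    metrics: Dict[str, float]
    stage: str = "staging"  # lifecycle: staging -> production -> archived


class ModelRegistry:
    """Hypothetical in-memory registry; a real one would persist artifacts and metadata."""

    def __init__(self) -> None:
        self._versions: Dict[str, List[ModelVersion]] = {}

    def register(self, name: str, model: Any, metrics: Dict[str, float]) -> ModelVersion:
        """Store a new version of `name`; versions are numbered from 1."""
        versions = self._versions.setdefault(name, [])
        entry = ModelVersion(name, len(versions) + 1, model, dict(metrics))
        versions.append(entry)
        return entry

    def promote(self, name: str, version: int) -> ModelVersion:
        """Make one version the production model and archive the previous one."""
        versions = self._versions.get(name, [])
        if not 1 <= version <= len(versions):
            raise KeyError(f"{name} has no version {version}")
        target = versions[version - 1]
        for entry in versions:
            if entry.stage == "production" and entry is not target:
                entry.stage = "archived"
        target.stage = "production"
        return target

    def production(self, name: str) -> Optional[ModelVersion]:
        """Return the version currently serving predictions, if any."""
        return next((e for e in self._versions.get(name, []) if e.stage == "production"), None)

    def versions(self, name: str) -> List[ModelVersion]:
        return list(self._versions.get(name, []))
```

Keeping exactly one production version per model name lets the serving layer always ask "what is live now?", while older versions and their metrics stay available for comparison and rollback.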

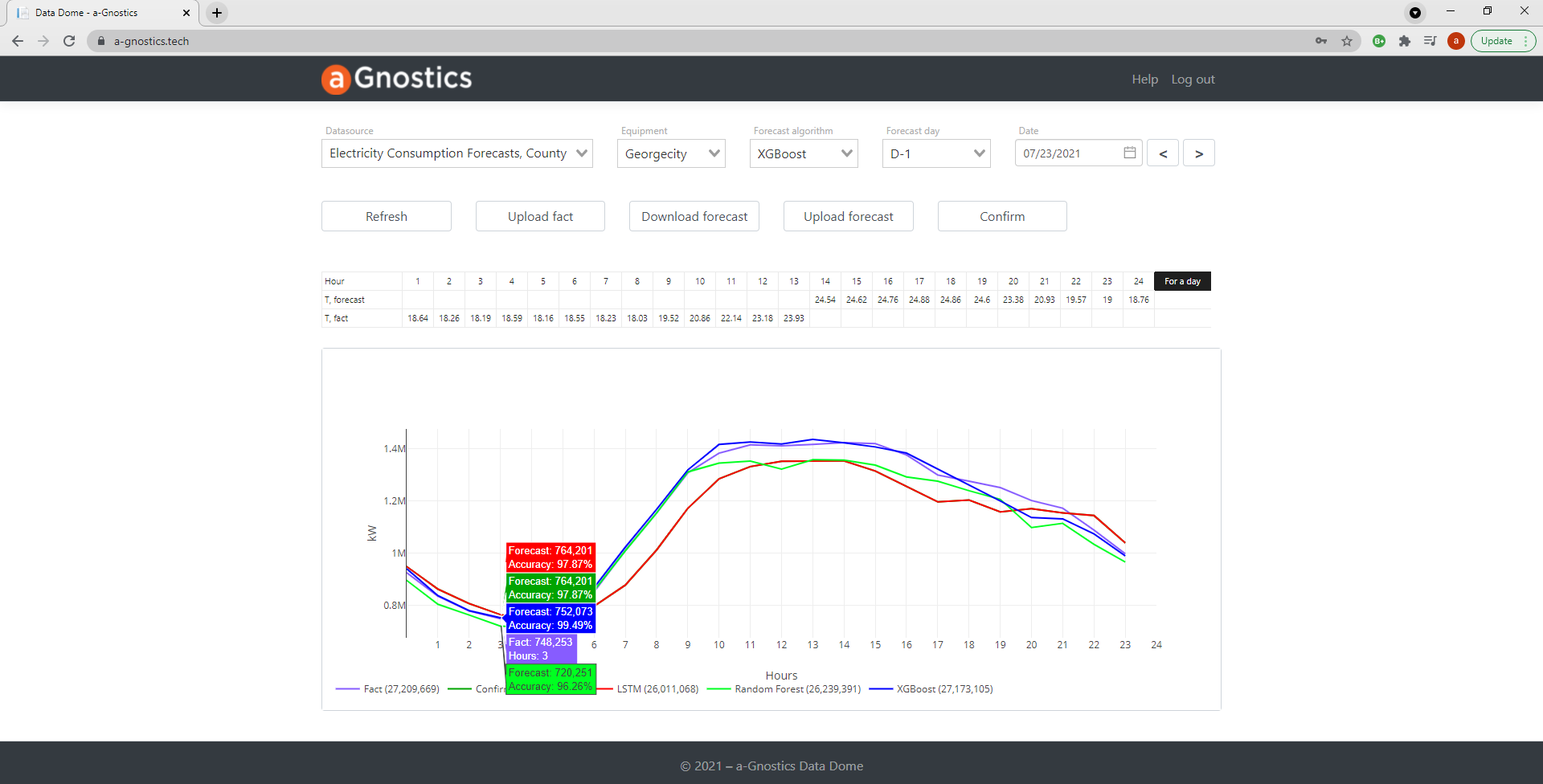

Out of the box, a-Gnostics contains a number of pre-built pipelines for training and deploying models. They make it possible to combine industry-standard algorithms (LSTM, XGBoost, Random Forest) with unique models or complex chains. To automate the selection of the most accurate model for regression problems, we developed a special walk-forward algorithm that accepts a dataset and a set of models as input. The algorithm returns the most accurate model for the specific task, along with detailed metrics for each model.
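The details of the a-Gnostics algorithm are not described here, so the sketch below shows only the general walk-forward (rolling-origin) evaluation technique under simple assumptions: expanding training windows, a fixed forecast horizon, mean absolute error (MAE) as the metric, and three toy forecasters standing in for models such as LSTM, XGBoost, or Random Forest.

```python
from statistics import mean
from typing import Callable, Dict, List, Sequence, Tuple

# A "model" is any function mapping (training history, horizon) to a list of forecasts.
Forecaster = Callable[[Sequence[float], int], List[float]]


def naive_last(history: Sequence[float], horizon: int) -> List[float]:
    """Repeat the last observed value."""
    return [history[-1]] * horizon


def moving_average(window: int) -> Forecaster:
    """Forecast the mean of the last `window` observations."""
    def forecast(history, horizon):
        return [mean(history[-window:])] * horizon
    return forecast


def seasonal_naive(period: int) -> Forecaster:
    """Repeat the values observed during the last full season."""
    def forecast(history, horizon):
        n = len(history)
        return [history[n - period + (h % period)] for h in range(horizon)]
    return forecast


def walk_forward_select(
    series: Sequence[float],
    models: Dict[str, Forecaster],
    initial_train: int,
    horizon: int,
) -> Tuple[str, Dict[str, float]]:
    """Score every model with expanding-window walk-forward validation (MAE).

    Each fold trains on series[:t] and tests on series[t:t + horizon]; t starts at
    `initial_train` and moves forward by `horizon` while a full test window fits.
    """
    if initial_train + horizon > len(series):
        raise ValueError("series too short for a single walk-forward fold")
    scores: Dict[str, float] = {}
    for name, model in models.items():
        errors: List[float] = []
        t = initial_train
        while t + horizon <= len(series):
            predicted = model(series[:t], horizon)
            actual = series[t:t + horizon]
            errors.extend(abs(p - a) for p, a in zip(predicted, actual))
            t += horizon
        scores[name] = mean(errors)
    best = min(scores, key=scores.get)
    return best, scores
```

On a series that repeats the pattern `[10, 20, 30, 40]`, with 8 initial training points and a horizon of 4, the seasonal model scores an MAE of 0, the moving average 10, and the last-value model 15, so the seasonal model is returned together with all three scores. Evaluating only on data that comes after each training window avoids the look-ahead leakage that ordinary shuffled cross-validation would introduce for time series.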

To simplify work on common problems, we created wrapper classes around the models that make time-series regression tasks easier to handle.
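One common way to build such a wrapper, shown here as an assumption rather than the platform's actual classes, is to turn a series into a supervised dataset of lagged values and then forecast recursively. Any estimator with scikit-learn-style `fit(X, y)` and `predict(X)` methods could be plugged in; the tiny least-squares stand-in keeps the example dependency-free, and all class names are hypothetical.

```python
from typing import List, Protocol, Sequence


class Regressor(Protocol):
    """Any scikit-learn-style estimator with fit(X, y) and predict(X)."""
    def fit(self, X, y): ...
    def predict(self, X): ...


class LagRegressionForecaster:
    """Hypothetical wrapper: regress each value on its previous `n_lags` values."""

    def __init__(self, regressor: Regressor, n_lags: int):
        self.regressor = regressor
        self.n_lags = n_lags
        self._history: List[float] = []

    def _make_xy(self, series: Sequence[float]):
        X = [list(series[i - self.n_lags:i]) for i in range(self.n_lags, len(series))]
        y = list(series[self.n_lags:])
        return X, y

    def fit(self, series: Sequence[float]) -> "LagRegressionForecaster":
        X, y = self._make_xy(series)
        self.regressor.fit(X, y)
        self._history = list(series)
        return self

    def forecast(self, horizon: int) -> List[float]:
        history = list(self._history)
        out: List[float] = []
        for _ in range(horizon):
            # Recursive strategy: each prediction becomes the newest lag for the next step.
            y_hat = self.regressor.predict([history[-self.n_lags:]])[0]
            out.append(y_hat)
            history.append(y_hat)
        return out


class SimpleLinearRegressor:
    """Least-squares line on the first feature only; a stand-in for a real model."""

    def fit(self, X, y):
        xs = [row[0] for row in X]
        x_bar, y_bar = sum(xs) / len(xs), sum(y) / len(y)
        sxx = sum((x - x_bar) ** 2 for x in xs)
        sxy = sum((x - x_bar) * (v - y_bar) for x, v in zip(xs, y))
        self.slope = sxy / sxx
        self.intercept = y_bar - self.slope * x_bar
        return self

    def predict(self, X):
        return [self.intercept + self.slope * row[0] for row in X]
```

For example, fitting `LagRegressionForecaster(SimpleLinearRegressor(), n_lags=1)` on the series 1, 3, 5, ..., 19 learns "next value = previous value + 2", so a 3-step forecast continues the trend with 21, 23, 25. The wrapper hides lag construction and the recursive loop, so the same calling code works for any underlying regressor.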

A good example of why ModelOps automation matters is forecasting electricity consumption. Mathematically, this is a time-series regression problem. In theory, a time-series model should be retrained as often as possible so that it remains relevant to the incoming data stream. New data (actual electricity consumption) arrives every day. After a series of experiments, we determined that in the long run it is more beneficial to retrain the model every day, while still varying the length of the training dataset from time to time. As a result, with N forecasting objects and M different models, N*M models have to be trained daily. Even for small values of N and M, this cannot be done manually.
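The arithmetic grows quickly: for example, 50 objects and 4 models already mean 200 training runs every day. A minimal sketch of generating and running these N*M jobs might look like the following; the job fields, the fixed-length training window, and the thread pool are assumptions for illustration, and a production setup would more likely dispatch the jobs to a workflow engine or a container cluster.

```python
from concurrent.futures import ThreadPoolExecutor
from datetime import date, timedelta
from itertools import product
from typing import Any, Callable, Dict, List, Sequence


def daily_training_jobs(
    objects: Sequence[str], model_names: Sequence[str], run_date: date, window_days: int
) -> List[Dict[str, Any]]:
    """One job per (object, model) pair, each trained on the last `window_days` days."""
    train_from = run_date - timedelta(days=window_days)
    train_to = run_date - timedelta(days=1)  # the most recent complete day
    return [
        {"object": obj, "model": m, "train_from": train_from, "train_to": train_to}
        for obj, m in product(objects, model_names)
    ]


def run_jobs(jobs: List[Dict[str, Any]], train_fn: Callable[[Dict[str, Any]], Any], max_workers: int = 8) -> List[Any]:
    """Run all jobs concurrently; `train_fn` is a hypothetical training callback."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(train_fn, jobs))
```

With three objects and two models, a run on 2021-08-02 with a 30-day window produces six jobs, each training on data from 2021-07-03 through 2021-08-01. Changing `window_days` between runs is one way to vary the training dataset length; for CPU-bound training, processes or separate containers would typically replace the thread pool.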

a-Gnostics serves models in production following the Model-as-a-Service (MaaS) principle. For example, to get an electricity forecast, the consumer connects to the corresponding module (ElectricityService) via its REST API. The modular architecture of a-Gnostics exposes functionality through an API at the module level.
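The MaaS idea can be sketched with nothing but the Python standard library: a service process wraps a model behind an HTTP endpoint, and the consumer only sends JSON and receives JSON. The sketch below assumes a `/api/v1/forecast` path, hypothetical `baseline_kwh` and `hours` request fields, and a stand-in "model" that returns a flat forecast; it is not the actual ElectricityService implementation.

```python
import json
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer


def forecast_electricity(payload):
    """Hypothetical stand-in for a trained model: a flat forecast from a baseline."""
    baseline = float(payload.get("baseline_kwh", 0.0))
    hours = int(payload.get("hours", 24))
    return [baseline] * hours


class ElectricityServiceHandler(BaseHTTPRequestHandler):
    """Model-as-a-Service endpoint: JSON request in, JSON forecast out."""

    def do_POST(self):
        if self.path != "/api/v1/forecast":
            self.send_error(404)
            return
        length = int(self.headers.get("Content-Length", 0))
        payload = json.loads(self.rfile.read(length) or b"{}")
        body = json.dumps({"forecast": forecast_electricity(payload)}).encode()
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # silence default request logging for the example


def serve_in_background(port=0):
    """Start the service on localhost; port 0 lets the OS pick a free port."""
    server = HTTPServer(("127.0.0.1", port), ElectricityServiceHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


def post_json(url, payload):
    """Consumer side: call the service over REST and decode the JSON reply."""
    request = urllib.request.Request(
        url,
        data=json.dumps(payload).encode(),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=5) as response:
        return json.loads(response.read())
```

A consumer would start or locate the service, then call `post_json(f"http://127.0.0.1:{port}/api/v1/forecast", {"baseline_kwh": 5, "hours": 3})` and receive `{"forecast": [5.0, 5.0, 5.0]}`. The consumer never imports the model, so the model behind the endpoint can be retrained or replaced without changing client code.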

API

For a fully functional production system, simply executing the model is not enough. The consumer needs a software interface with multiple service functions to interact with the model. For example, data such as the next day’s manufacturing plan must be supplied to the model to get a forecast.

For these purposes, a-Gnostics provides a variety of APIs with different sets of calls for different tasks. For example, api/v1/forecast gives access to forecasting functions, and api/v1/equipment provides access to industrial equipment data.
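How these API groups are wired internally is not described here. A minimal sketch, assuming a simple route table keyed by HTTP method and path, shows how separate sets of calls (forecasting, equipment data) can share one dispatch mechanism. The handlers are stubs, and the parameter names, including the `plan` field for the next-day manufacturing plan, are hypothetical.

```python
from typing import Any, Callable, Dict, Tuple

Handler = Callable[[Dict[str, Any]], Dict[str, Any]]
ROUTES: Dict[Tuple[str, str], Handler] = {}


def route(method: str, path: str):
    """Register a handler for one call of an API group, e.g. /api/v1/forecast."""
    def register(fn: Handler) -> Handler:
        ROUTES[(method, path)] = fn
        return fn
    return register


@route("POST", "/api/v1/forecast")
def forecast(params: Dict[str, Any]) -> Dict[str, Any]:
    # Stub: a real handler would pass the manufacturing plan to the forecasting model.
    plan = params["plan"]
    return {"object": params["object"], "plan_days": len(plan)}


@route("GET", "/api/v1/equipment")
def equipment(params: Dict[str, Any]) -> Dict[str, Any]:
    # Stub: a real handler would query stored equipment data.
    return {"equipment_id": params["equipment_id"], "readings": []}


def dispatch(method: str, path: str, params: Dict[str, Any]) -> Tuple[int, Dict[str, Any]]:
    """Return an HTTP-style (status, body) pair for a request."""
    handler = ROUTES.get((method.upper(), path.rstrip("/")))
    if handler is None:
        return 404, {"error": f"no route for {method} {path}"}
    try:
        return 200, handler(params)
    except KeyError as exc:
        return 400, {"error": f"missing parameter {exc}"}
```

A forecast request that includes the plan returns status 200, while the same request without the `plan` field returns 400, so the consumer learns which input the model still needs. Adding a new group of calls only requires registering new handlers under their own path prefix.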

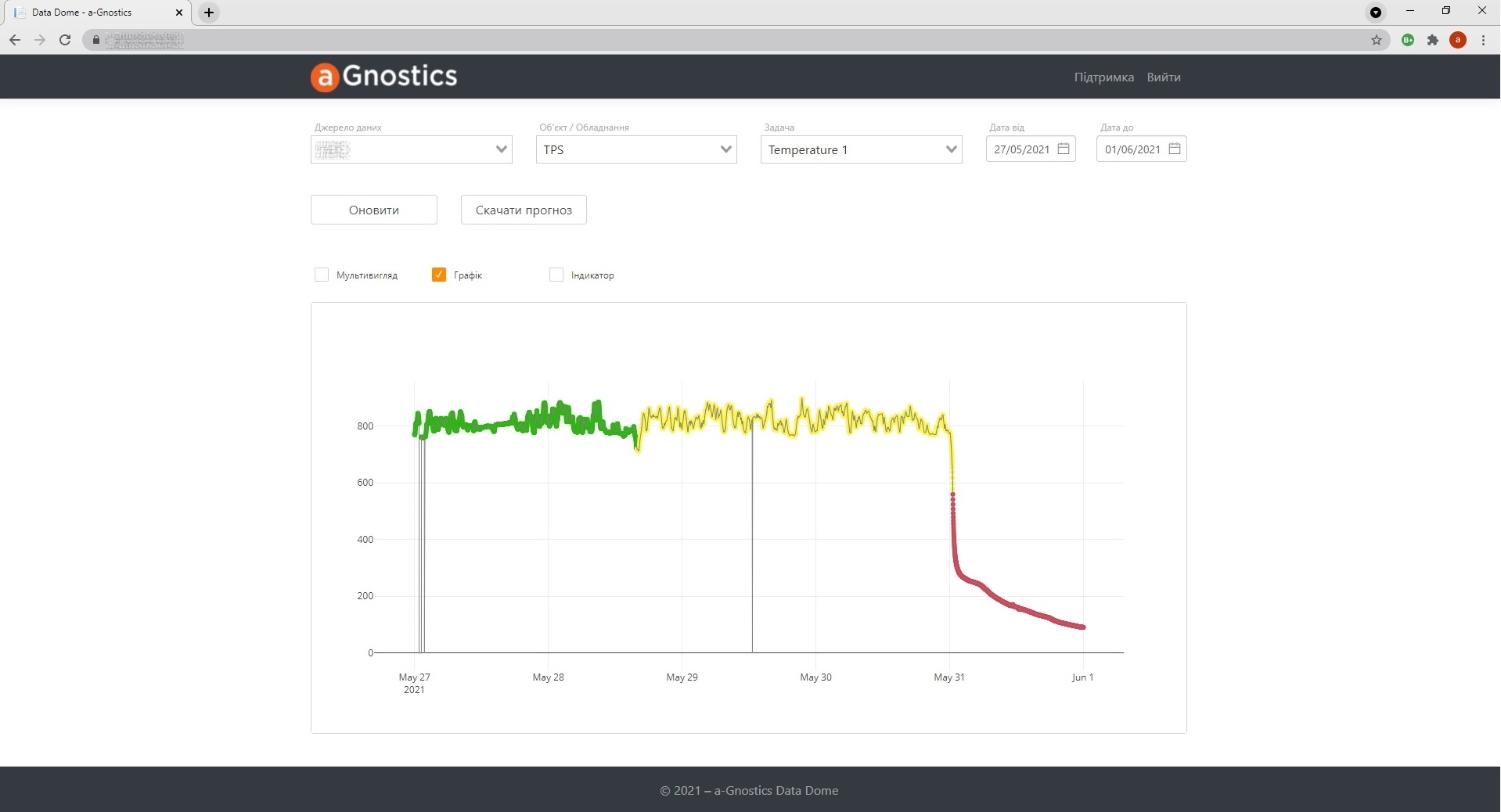

In addition to the APIs, a-Gnostics provides data visualization via DataDome, a user interface that gives end users a full view of the data and helps them make data-driven decisions.

Security

Following high security standards is important for any software product, especially for a platform that integrates data and systems across an organization. Deployment in an enterprise environment is accompanied by a very thorough review by the Information Security Department. To be prepared in advance and minimize the number of iterations during deployment, our team follows security best practices throughout implementation, routinely running static and dynamic security scans and addressing all potential vulnerabilities.

We also try to use up-to-date versions of all third-party components and services to stay current with security requirements.

When the services run in the cloud, we recommend using the appropriate managed cloud services (AWS ECS, AWS EKS, Azure AKS, Azure App Service, etc.) instead of virtual machines.

Conclusion

The a-Gnostics platform is successfully used by leading energy and agritech companies, as well as clients from other equipment-heavy industries.

As of August 2021, more than 100,000 trained models were used in production, and more than 1,000,000 forecasts were executed.